Using Profiling in Elixir to Improve Performance

Elixir is all about performance. Say you have an app up and running with Elixir, but some parts aren’t working as fast as you would like them to.

That is where profiling comes in. Profiling tools usually walk you through the frequency and duration of function calls and where they spend their time. Erlang has impressive profile tooling available at its disposal.

In this post, we will look into three profiling tools in Elixir: cprof, eprof, and fprof.

Do I Need Profiling for My Elixir App?

Firstly, I want to clarify that you may not need profiling at all. As Donald Knuth wrote: ‘premature optimization is the root of all evil.’ Some operations just cannot be optimized.

For example, say you want to fetch something from your database. You issue a query and get a reply. This incurs a network round trip with some data. If you need all the data, the network round trip time cannot be optimized without moving your database closer to the app.

With that out of the way, let’s jump into profiling options.

Profiling with cprof in Elixir

cprof counts the number of times each function is invoked. It doesn’t profile the time spent in those functions, so it comes with the least amount of overhead.

Use it when you already have an estimate of your functions’ runtimes. You’ll see the frequency with which each function is invoked.

Let’s see how we can profile a Fibonacci number generator with cprof.

You can follow along with the code. It uses the Rosetta Code algorithm for Fibonacci sequence generation.

Jump into an IEx session and run cprof like this:

iex(15)> :cprof.start()

19244

iex(16)> FibonacciRosettaCode.list(10)

[0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55]

iex(17)> :cprof.analyse(FibonacciRosettaCode)

{FibonacciRosettaCode, 79,

[

{{FibonacciRosettaCode, :fibonacci, 3}, 54},

{{FibonacciRosettaCode, :fibonacci, 1}, 11},

{{FibonacciRosettaCode, :"-fun.fibonacci/1-", 1}, 11},

{{FibonacciRosettaCode, :module_info, 1}, 1},

{{FibonacciRosettaCode, :list, 1}, 1},

{{FibonacciRosettaCode, :__info__, 1}, 1}

]}

iex(18)> :cprof.stop()

19266First start cprof, run the code that you want to profile, and then call :cprof.analyse/1 to fetch the statistics for the given module. Several other options are available inside the :cprof module, like pausing profiling with :cprof.pause/0.

We can also use Mix’s wrapper task profile.cprof directly.

➜ mix profile.cprof -e "FibonacciRosettaCode.list(10)"

CNT

Total 113

FibonacciRosettaCode 77 <--

FibonacciRosettaCode.fibonacci/3 54

FibonacciRosettaCode.fibonacci/1 11

FibonacciRosettaCode."-fun.fibonacci/1-"/1 11

FibonacciRosettaCode.list/1 1

Enum 24 <--

Enum.reduce_range/5 12

anonymous fn/3 in Enum.map/2 11

Enum.map/2 1

...Here, we can see that the function fibonacci/3 is called the most times. So if we can somehow decrease the number of calls to that function or optimize the code inside it, we could improve performance.

eprof Profiling in Elixir

cprof measures counts while eprof measures the execution time (in addition to counts) spent inside each function. It has slightly more overhead than cprof. Use eprof when you want to find the most time-consuming functions.

The usage is very similar to cprof. You just need to start the profiler, run the code you want to profile and then call analyze to fetch the results.

Let’s analyze the results from the mix task profile.eprof on our sample Fibonacci generator.

➜ mix profile.eprof -e "FibonacciRosettaCode.list(10)"

# CALLS % TIME µS/CALL

Total 106 100.0 45 0.42

anonymous fn/0 in :elixir_compiler_1.__FILE__/1 1 0.00 0 0.00

:lists.reverse/1 1 2.22 1 1.00

:lists.reverse/2 1 2.22 1 1.00

FibonacciRosettaCode.fibonacci/1 11 4.44 2 0.18

Enum.map/2 1 4.44 2 2.00

Range.new/2 1 4.44 2 2.00

FibonacciRosettaCode."-fun.fibonacci/1-"/1 11 8.89 4 0.36

FibonacciRosettaCode.list/1 1 8.89 4 4.00

:erlang.apply/2 1 8.89 4 4.00

anonymous fn/3 in Enum.map/2 11 13.33 6 0.55

Enum.reduce_range/5 12 13.33 6 0.50

FibonacciRosettaCode.fibonacci/3 54 28.89 13 0.24Here we can see that fibonacci/3 is again the most time-consuming part of our program, consuming 28.89% of the total execution time and 0.24µS per call.

In addition, eprof also allows us to profile function calls across different processes. The usage is very simple. Let’s see an example:

:eprof.start_profiling([self()])

# Do some work

1..100 |> Enum.each(fn i ->

spawn(fn -> FibonacciRosettaCode.list(i + 1) end)

end)

:eprof.stop_profiling()

:eprof.analyze()You need to call start_profiling/1 with a list of processes to profile. By default, this also tracks any other processes started from a profiled process.

When you are done, call stop_profiling and run analyze to get the results. They should look something like this, with an entry for each process and the percentage of time it was busy:

****** Process <0.150.0> -- 37.26 % of profiled time ***

FUNCTION CALLS % TIME [uS / CALLS]

-------- ----- ------- ---- [----------]

io:request/2 3 0.00 0 [ 0.00]

...

****** Process <0.277.0> -- 0.05 % of profiled time ***

FUNCTION CALLS % TIME [uS / CALLS]

-------- ----- ------- ---- [----------]

'Elixir.FibonacciRosettaCode':fibonacci/3 2 0.00 0 [ 0.00]

'Elixir.FibonacciRosettaCode':fibonacci/1 3 3.03 1 [ 0.33]

'Elixir.FibonacciRosettaCode':'-fun.fibonacci/1-'/1 3 3.03 1 [ 0.33]

...The great thing about this is that you can run it in production systems by accessing the remote console. Just call start_profiling/1, wait some time to process the requests or manually trigger some requests you want to profile. Then call stop_profiling followed by analyze to get the results.

Using fprof in Elixir

The final inbuilt tool you can use to profile your applications is fprof. It is a comprehensive profiling tool that generates a trace file containing timestamped entries for function calls, process-related events, and garbage collection data.

You can then feed this trace file into other tools to visualize the results thoroughly or use :fprof.analyse like above to fetch function counts and execution times.

Running fprof through IEx is quite advanced compared to running cprof and eprof, but fprof allows tracing multiple processes.

Here is how you can generate a trace file for all processes:

:fprof.start()

:fprof.trace([:start, procs: :all])

# Do some work

1..100 |> Enum.each(fn i ->

spawn(fn -> FibonacciRosettaCode.list(i + 1) end)

end)

:fprof.trace(:stop)

:fprof.profile()

:fprof.analyse(totals: false, dest: 'prof.analysis')This generates a comprehensive trace of everything that went on during the run with:

CNT- the number of times a function is calledACC- the accumulated time spent in the function, including other function callsOWN- the time the function spent to be executed.

Here’s the full file (50 MB) if you are interested.

Like eprof, fprof is a great tool to profile applications in production directly. Note that this will significantly slow down the application, so be prepared for degraded performance during profiling and a huge trace file.

So you have the data now. If you are feeling adventurous, you can start digging through the file and looking for patterns.

Or you can use some tools to help visualize your data. A popular one is erlgrind. It converts the fprof file to cgrind format that you can open inside KCachegrind.

To convert the file, download erlgrind and then run src/erlgrind profile.fprof.

This will generate a prof.cgrind file that you can open to see graphs like this:

Profile and Collect Metrics from Your Elixir App in Production

eprof and fprof can assist you with profiling your application in production. But there are a couple of additional tools worth mentioning - Phoenix Live Dashboard and Recon.

Phoenix Live Dashboard

Phoenix’s live dashboard can provide a great, quick overview of metrics. While not exactly a profiler, it shows OS data and metrics from telemetry events and processes, among other things.

Here is an example of the dashboard in action:

Recon

Recon is another great library that can be dropped into any project and help diagnose problems in production. It works with very little overhead and provides production-safe tracing helpers.

You can simply install it by adding the recon dependency to mix.exs. You can then start tracing calls to any function by using :recon_trace.calls/3 like this:

:recon_trace.calls({FibonacciRosettaCode, :fibonacci, :return_trace}, 10)This will trace all calls made to FibonacciRosettaCode.fibonacci for up to 10 lines, with one line for function call arguments and one for the return value with timestamps.

There is a lot more to recon, though, so I suggest you check out the Recon homepage for advanced usage.

AppSignal

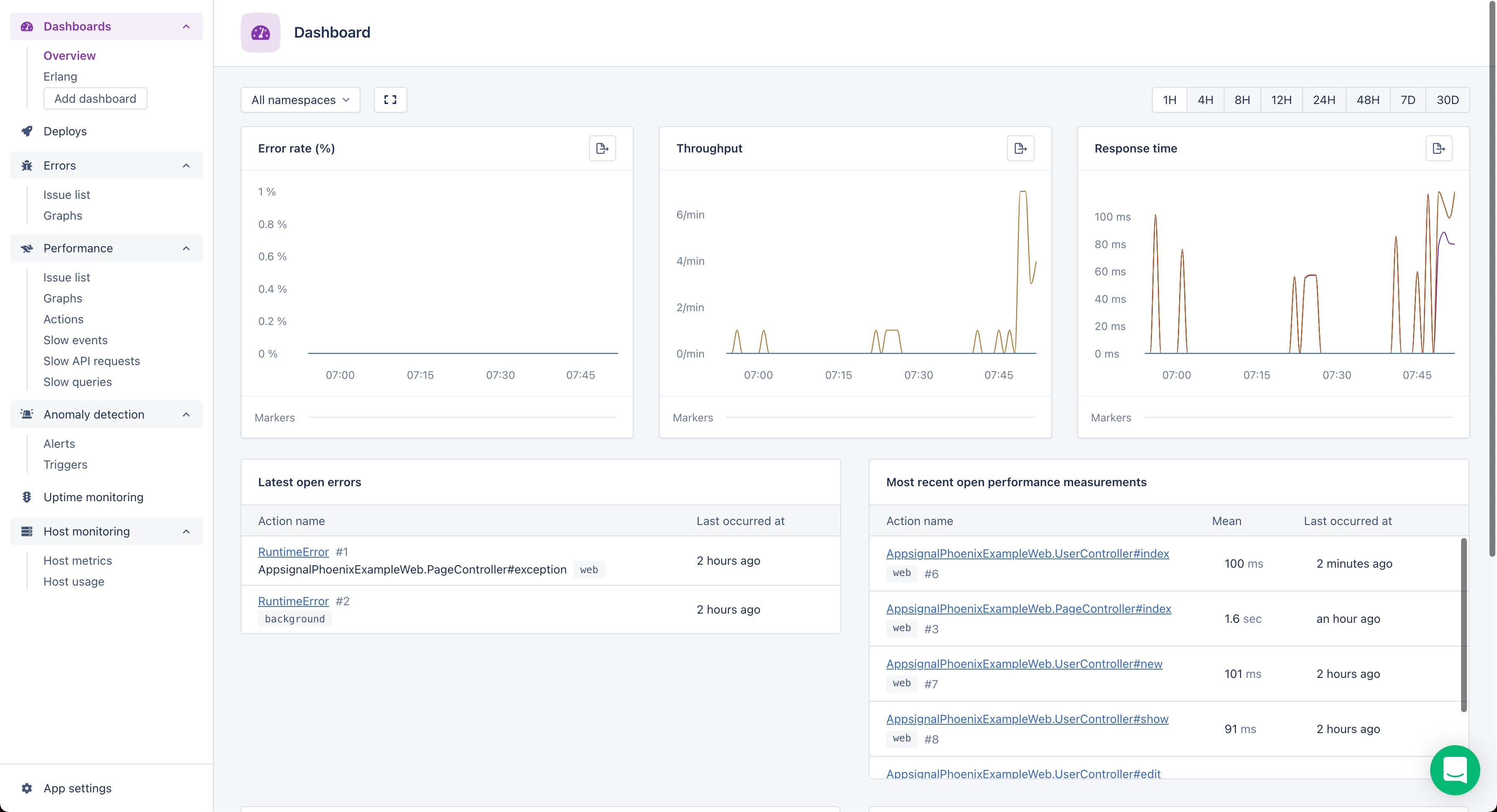

AppSignal is another great tool to collect performance data (among other things). [Adding AppSignal to an existing application (https://docs.appsignal.com/elixir/installation/#installing-the-package) takes a few seconds. Just install the appsignal dependency and run the appsignal.install mix task with an API key. It has a good set of default metric collection including the throughput and response times for the application.

You could even set up minutely probes to track custom metrics.

Here’s an example of the AppSignal dashboard collecting data from a sample application:

If you are looking to collect data about specific blocks of code that you suspect are slow, AppSignal’s instrumentation feature can help collect data about it. Just wrap the suspected code inside Appsignal.instrument/2 like this:

defmodule MyApp.PageController do

use MyApp, :controller

def index(conn, _params) do

custom_function()

render(conn, "index.html")

end

defp custom_function do

Appsignal.instrument("custom_function", fn ->

:timer.sleep(1000)

end)

end

endThis will show up on the AppSignal event graph tagged as custom_function. You can then explore this event across multiple calls and make an informed decision about the need for optimization.

Check out the full list of features on the AppSignal docs.

Wrap-up: Measure Your Elixir App’s Performance with Profiling

In this post, we saw how inbuilt tools like cprof, eprof and fprof can help gather performance insights for your code, even during production.

We also took a quick look at a couple of other tools to monitor and trace your applications: Phoenix Live Dashboard and Recon.

Until next time, happy profiling!

This article was originally posted on AppSignal Blog